Correspondence Networks with Adaptive Neighbourhood Consensus

¹Active Vision Lab & ²Visual Geometry Group

Department of Engineering Science, University of Oxford

* indicates equal contribution


Abstract

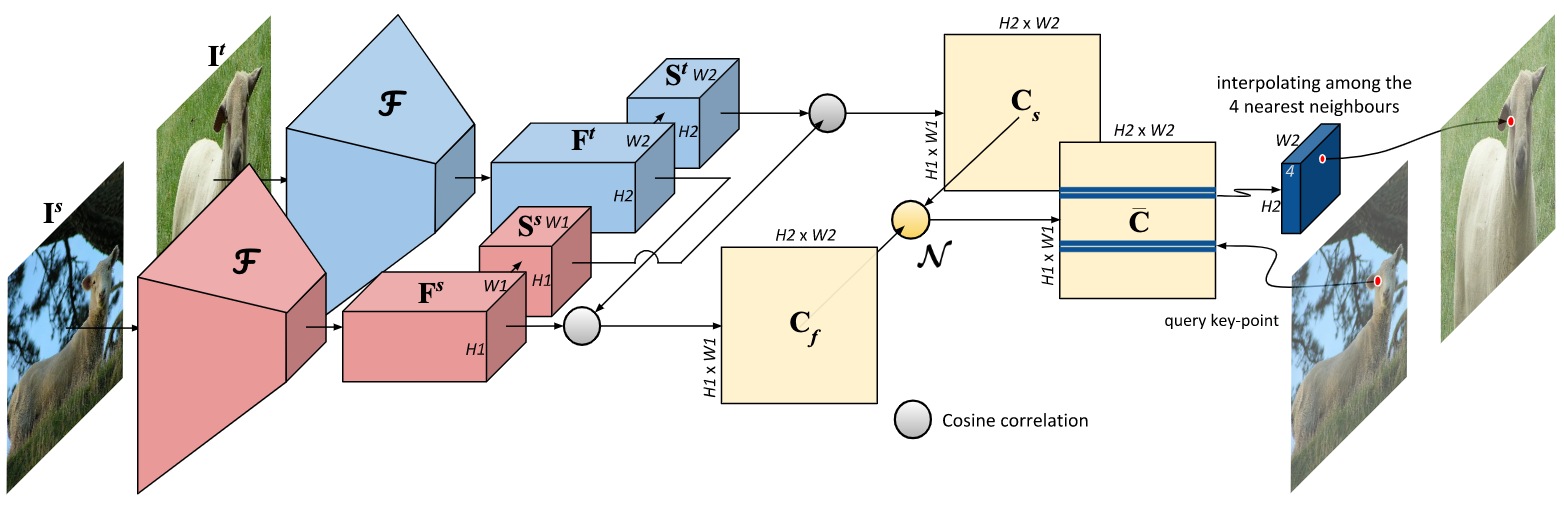

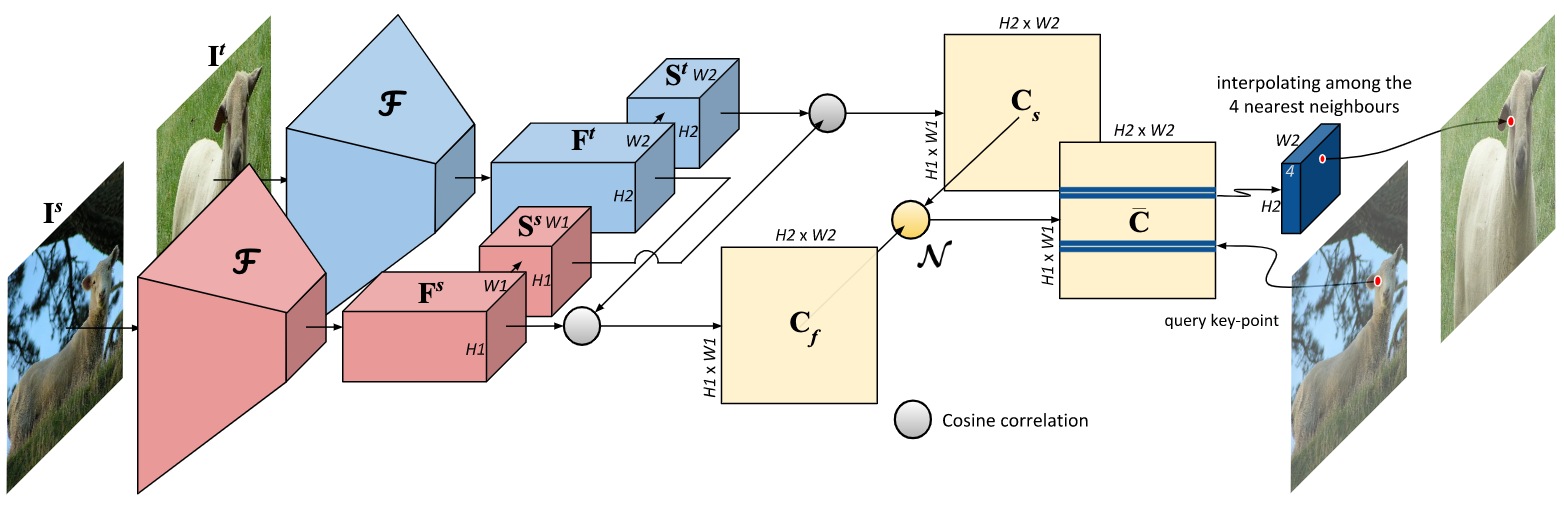

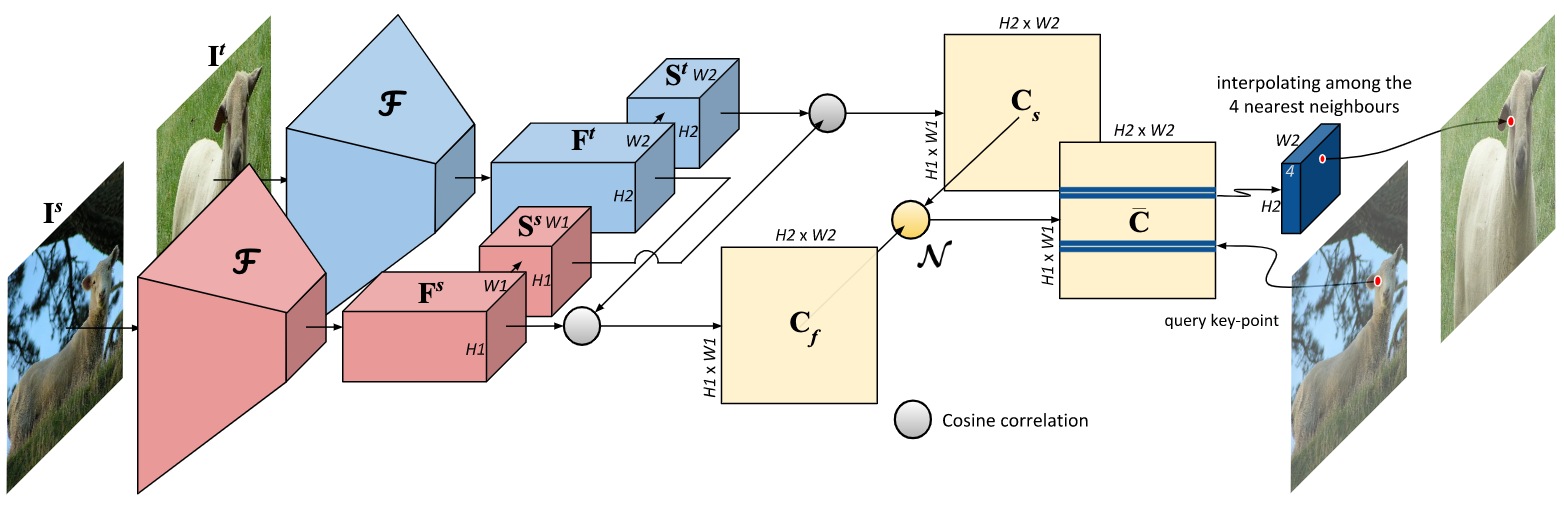

In this paper, we tackle the task of establishing dense visual correspondences between images containing objects of the same category. This is a challenging task due to large intra-class variations and a lack of dense pixel-level annotations. We propose a convolutional neural network architecture, called adaptive neighbourhood consensus network (ANC-Net), that can be trained end-to-end with sparse key-point annotations, to handle this challenge. At the core of ANC-Net is our proposed non-isotropic 4D convolution kernel, which forms the building block for the adaptive neighbourhood consensus module for robust matching. We also introduce a simple and efficient multi-scale self-similarity module in ANC-Net to make the learned feature robust to intra-class variations. Furthermore, we propose a novel orthogonal loss that can enforce the one-to-one matching constraint. We thoroughly evaluate the effectiveness of our method on various benchmarks, where it substantially outperforms state-of-the-art methods.
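To make the one-to-one matching constraint concrete, here is a minimal sketch of one plausible reading of an orthogonality-style penalty. It assumes the correlation between two images is flattened into a matrix C with one row per location in the first image and one column per location in the second, and it penalises the squared Frobenius norm of C Cᵀ − I. The function names (`correlation_matrix`, `orthogonal_penalty`) and the use of plain cosine similarity are illustrative assumptions, not the authors' implementation; the loss in the paper may include normalisation terms that are omitted here.

```python
import math


def l2_normalise(vec):
    """Scale a feature vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def correlation_matrix(feats_a, feats_b):
    """Cosine similarity between every location in image A and every location in image B.

    feats_a and feats_b are lists of per-location feature vectors
    (hypothetical inputs for this sketch).
    """
    a = [l2_normalise(f) for f in feats_a]
    b = [l2_normalise(f) for f in feats_b]
    return [[sum(x * y for x, y in zip(fa, fb)) for fb in b] for fa in a]


def orthogonal_penalty(corr):
    """Squared Frobenius norm of (C C^T - I).

    The value is zero when the rows of C are orthonormal, which holds when
    C encodes a one-to-one assignment (for example, a permutation matrix).
    """
    n = len(corr)
    total = 0.0
    for i in range(n):
        for j in range(n):
            gram = sum(p * q for p, q in zip(corr[i], corr[j]))
            target = 1.0 if i == j else 0.0
            total += (gram - target) ** 2
    return total


# One-to-one case: each location in A matches exactly one location in B.
one_to_one = correlation_matrix([[1, 0], [0, 1]], [[0, 1], [1, 0]])

# Degenerate case: both locations in B look like the first location in A.
many_to_one = correlation_matrix([[1, 0], [0, 1]], [[1, 0], [1, 0]])

print(orthogonal_penalty(one_to_one))
print(orthogonal_penalty(many_to_one))
```

In this toy setting the penalty vanishes for the one-to-one assignment and is strictly positive when two locations compete for the same match, which is the behaviour such a loss is meant to encourage.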

BibTeX

@inproceedings{Li2020Correspondence,
  author = {Shuda Li and Kai Han and Theo W. Costain and Henry Howard-Jenkins and Victor Prisacariu},
  title = {Correspondence Networks with Adaptive Neighbourhood Consensus},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2020},
}

Acknowledgments

We gratefully acknowledge the support of the European Commission Project Multiple-actOrs Virtual Empathic CARegiver for the Elder (MoveCare) and the EPSRC Programme Grant Seebibyte EP/M013774/1.

